Learning from the Past: Remembering the Millennium Bug

- Jonathan Dean

- Jul 21, 2025

- 7 min read

It is 25 years since the world entered the 21st century. Technically, the new century began on 1st January 2001 (the Gregorian calendar having no ‘year 0’). At the time, however, all eyes were on the moment when the calendar switched over from the 1900s to the 2000s and, despite the minor factual inaccuracy, this period was widely promoted as the ‘new millennium’. For those of us who were there, it was a time of relative optimism for the future and a massive cause for celebration around the globe. All major cities were vying to outdo one another on the size and spectacle of the celebrations they had planned to usher in the new era.

Against this carnival backdrop, however, and causing not a little anxiety in many parts of the world, was a hidden spectre that had been causing concern for many years in the run-up to the year 2000, known commonly as ‘The Millennium Bug’ (or simply as ‘Y2K’ – the abbreviated form of ‘Year 2000’).

The Millennium Bug was deceptively simple to understand, but its implications were far-reaching. In the early years of digital computing, storage space was generally a scarce and highly expensive resource, so to save space and simplify code, the year in a date was often represented as two digits (e.g. ‘1986’ would be represented as just ‘86’). Moreover, many systems were developed with a tacit expectation that they would have a limited lifespan, after which they would be replaced by newer, more capable systems, so dates beyond the year 2000 were often not even a consideration.
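To make the two-digit shortcut concrete, here is a minimal sketch in Python (the original systems were, of course, written in much older languages). The function names and the ‘account opened in 1986’ scenario are hypothetical, chosen purely for illustration; the sketch simply shows how a calculation that works throughout the 1900s goes wrong once the stored year rolls over to ‘00’.

```python
def elapsed_years_two_digit(start_yy: int, end_yy: int) -> int:
    """Elapsed years when both years are stored as two digits (e.g. 86 for 1986)."""
    return end_yy - start_yy


def elapsed_years_four_digit(start_year: int, end_year: int) -> int:
    """The same calculation with the full four-digit year stored."""
    return end_year - start_year


# Hypothetical example: an account opened in 1986, checked in 1999 and in 2000.
in_1999 = elapsed_years_two_digit(86, 99)          # 13, as expected
in_2000 = elapsed_years_two_digit(86, 0)           # -86: the year '00' looks like 1900
corrected = elapsed_years_four_digit(1986, 2000)   # 14, the correct answer

print(in_1999, in_2000, corrected)
```

A negative ‘age’ of this kind, fed into an interest or billing calculation, is the sort of error behind the scenarios that worried people at the time.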

Developers in the 1960s, 1970s and early 1980s didn’t give much thought to what would happen to their systems when the year clicked over from 1999 to 2000 – until that started to become a very real possibility. Older legacy systems, which might have been expected to have been replaced, had been left largely untouched since they were first created, the investment needed to replace or update them seldom being prioritized over the many other demands on their organizations’ resources.

It wasn’t until quite late in the 1990s that the Y2K issue was really addressed in earnest, and an enormous amount of effort and money was expended in correcting legacy systems and ensuring new ones did not manifest the same issue.

At the time, the news media was full of horror stories about what might happen if the Y2K bug was not corrected:

- Power and utilities systems could potentially go down, leaving people without electricity and water

- Banking systems would crash or would make inaccurate calculations of interest which might either bankrupt the customers or the banks themselves (or both!)

- Air traffic control systems could go down, leaving planes undirected and ‘blind’ mid-flight

The media was replete with daily ‘Y2K’ inspired doomsday scenarios.

The world was starting to become a much more connected place than it had been 20 years previously and hence the Y2K issue, though superficially quite simple, was unpredictable and posed a significant risk to our way of life and to the continued existence of many businesses.

Where is the Cavalry?

The problem was compounded still further by a more systemic issue that the industry had been largely oblivious to in the latter years of the old millennium – the insidious but unrelenting erosion of key technical skills that would be needed to fix the Y2K problem.

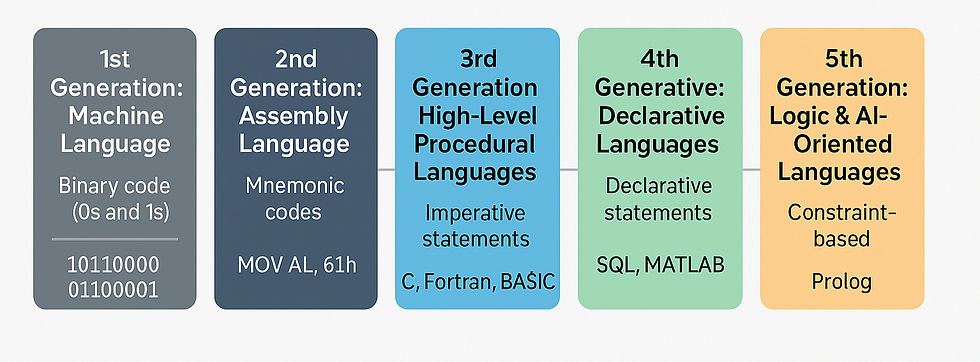

Many of the legacy systems which were most affected by Y2K were related to the government, financial and business sectors (banks, trading systems etc). At the time these systems were initially developed, the programming language of choice for these applications was usually COBOL (COmmon Business Oriented Language), an early 3rd generation language specifically designed for business applications and large-scale data processing.

As the IT industry progressed, programming languages evolved, and older ones such as COBOL and FORTRAN started to be replaced as the implementation language of choice by newer 3rd and 4th generation languages. This led to a sharp decline* in the market for COBOL skills. Veteran COBOL programmers retired, and younger developers saw the language as antiquated and unfashionable, so they focused on newer languages. The once ‘must-have’ skill faded away into relative obscurity.

(*N.B. the above description refers to the general trend in coding skills; COBOL has not vanished and is still being developed today – the latest update was in 2023, and it is estimated that even now, 70% of all financial transactions around the world touch a COBOL system at some point in their processing cycle.)

Then, in the mid-90s and as a direct consequence of Y2K, a massive amount of COBOL expertise was required in a very short time to correct the issues arising because of the millennium bug. This led to a huge skills shortage which highlighted a profound vulnerability for many organizations: it wasn’t just code that had decayed over time—it was the institutional memory and skillsets required to sustain legacy systems.

Many institutions and businesses were reliant on systems that were developed using skills that they no longer had available to them and consequently they were at the mercy of market forces, with experienced COBOL developers coming out of retirement to almost set their own price to undertake remediation work on these aging systems.

This should have been a wake-up call for businesses to look beyond their surface needs and plan for deep, cross-generational capability. This is where a framework like CMMI (Capability Maturity Model Integration) could have been helpful.

CMMI addresses skills, knowledge and experience needs in several places, most notably in the ‘Organizational Training’ practice area where it encourages organizations to:

- Identify strategic skill gaps before they become crises

- Establish training programs that evolve with technology and business goals

- Continuously evaluate and improve learning to keep capabilities aligned with organizational direction

Since the release of version 3 of CMMI, the model has included several speciality domains and one of these, the ‘People’ domain, also includes a practice area called ‘Workforce Empowerment’. This includes practices which (amongst other things) ensure that knowledge retention and transfer, especially in niche but mission-critical areas, is planned and managed to preserve the corporate knowledge.

Finally, at a more fundamental level, the ‘Planning’ and ‘Monitor and Control’ Practice Areas include practices that encourage organizations to consider the knowledge and skills they need to implement specific planned activities and to then ensure that these skills are available when needed.

Had these practices been actively embedded into long-term planning, COBOL expertise might not have become a forgotten art—it would’ve been a maintained asset.

The lesson from Y2K is clear and timeless: technological resilience depends on human capability. Organizations often treat training as a tactical fix, but real strategic strength comes from an integrated view of skills, systems, and future demands.

Rather than chasing talent in emergencies, imagine businesses nurturing it quietly, consistently—planting seeds of expertise across generations, not just responding to weeds when they appear.

Don’t Panic – We have AI now…

Y2K was a quarter of a century ago – an age in the IT industry – and the world has moved on dramatically since then. Yet today we find ourselves at another important inflection point. The rise of AI technologies brings with it undeniable and alluring advantages: speed, scalability, predictive insights. However, just like the gradual sidelining of COBOL decades ago, there is a new but related risk: the erosion within organizations of their core technical knowledge as automation replaces their routine manual and decision-making processes.

While AI can enhance productivity and fill gaps quickly, over-reliance could potentially leave organizations dependent on systems they don’t fully understand and may not be able to fully maintain. A shortage of people capable of maintaining, troubleshooting and innovating beyond the boundaries of their primary AI capabilities may open these organizations up to significant risks, similar in essence to those posed by the Y2K bug.

Perhaps we need to encourage organizations to focus on stewardship of their intellectual infrastructure rather than purely outsourcing it to AI, by taking steps to preserve foundational skills in parallel with technological advancements and capturing institutional knowledge to prevent it from being entirely subsumed by automation in the quest for efficiency.

AI is a powerful tool which can be deployed as a huge ‘force multiplier’ to augment human expertise rather than replace it and this is especially important when we look at our legacy, mission-critical systems.

Just as COBOL programmers became digital lifelines in the late 1990s, today’s stewards of legacy infrastructure, low-level coding, and system architecture may become tomorrow’s emergency responders.

A Couple of Final Thoughts

In an age of rapid innovation, it’s easy to let old skills fade. But as Y2K taught us, what is “obsolete” today might be essential tomorrow. CMMI’s ‘Organizational Training’ Practice Area reminds us to treat skill development as a strategic asset, not a checkbox—because sometimes, the key to the future lies in understanding the past.

Finally, much as it would be nice to think that the Y2K crisis was a ‘one-off’, a peculiar historical curiosity that is unlikely ever to be repeated, we should be mindful that in just 13 years’ time, a related problem will be upon us.

The ‘Year 2038’ Problem will affect legacy 32-bit Unix systems. Older Unix systems store date/time values as a signed 32-bit integer which represents the number of seconds since 00:00:00 UTC on 1st January 1970 – often referred to as the ‘Unix epoch’.

That counter will hit the maximum value possible in this representation form at 03:14:07 UTC on 19th January 2038. One second later, it will overflow and what happens at this point is currently unpredictable and will depend on the system in question.
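The arithmetic behind that date can be checked with a short Python sketch. It is an illustration only: `wrap_int32` is a hypothetical helper that simulates what a two’s-complement signed 32-bit counter would do if it simply wrapped around, which is one common outcome but, as noted above, not necessarily how any particular system will behave.

```python
from datetime import datetime, timedelta, timezone

# The Unix epoch: 00:00:00 UTC on 1st January 1970.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Largest value a signed 32-bit integer can hold.
INT32_MAX = 2**31 - 1


def wrap_int32(value: int) -> int:
    """Simulate two's-complement wraparound of a signed 32-bit integer (assumed behaviour)."""
    return (value + 2**31) % 2**32 - 2**31


def to_utc(seconds: int) -> datetime:
    """Convert a count of seconds since the epoch into a UTC date and time."""
    return EPOCH + timedelta(seconds=seconds)


last_valid = to_utc(INT32_MAX)
after_overflow = to_utc(wrap_int32(INT32_MAX + 1))

print(last_valid)      # 2038-01-19 03:14:07+00:00
print(after_overflow)  # 1901-12-13 20:45:52+00:00
```

Under the wraparound assumption, the counter jumps from its largest positive value to its most negative one, so a system would suddenly believe it was December 1901: a close cousin of the ‘00 means 1900’ confusion at the heart of Y2K.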

Most modern Unix systems are now 64-bit and will be effectively immune from this issue, but there are still many legacy 32-bit systems around, and the 32-bit architecture is still popular with embedded systems and IoT devices. Of course, we have 13 years to resolve this, so it’s unlikely to be a problem, is it? But maybe we should be mindful of the fact that the first documented warning of the Y2K problem was recorded in 1958 – 42 years before the date of expected incidence…

If you would like to learn more about CMMI and how it might help you to increase the resilience of your business by improving your underlying processes and operating procedures, then visit our website (www.casmarantraining.com) to learn about our training and consultancy services.
